Dummies’ guide to GPU/computer terminology

In January, leakers shared what they claimed were the “real” specs of the RTX 4090 Ti, including its CUDA core count, VRAM, and expected wattage, along with speculation about its price. (Note: we are talking about computers here.)

All this talk of CUDA cores and other jargon can confuse people who aren’t tech-savvy, so here is a starter guide to help make sense of it.

Many people treat “GPU” and “Graphics Card” as the same thing, and in a way they nearly are. Even tech-savvy people use the two terms interchangeably. However, they refer to different things.

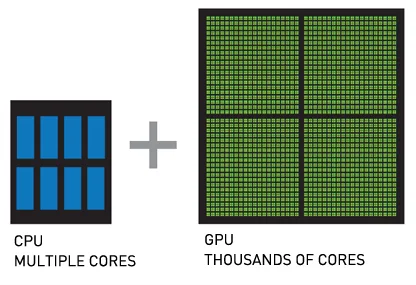

GPU stands for Graphics Processing Unit, and its job is to turn the information fed to it into a picture on a screen. It is not to be confused with the CPU, the Central Processing Unit. The GPU differs from the CPU in that it is specialized for graphics work, which makes it very fast at that one job.

Are you still with me? Alright, good, because it’s only getting more specific.

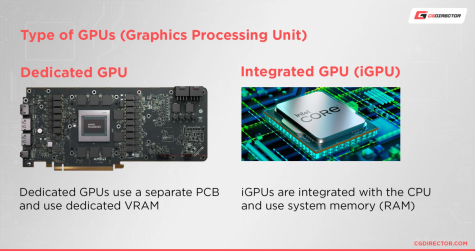

A Graphics Card is what contains the GPU. Not all computers have a Graphics Card; some instead rely on a GPU built into the same chip as the CPU. The Graphics Card’s job is to house the GPU and keep it cool. Graphics Cards are often much faster than a built-in GPU (an iGPU) because the card gives the GPU room for more processing hardware and far more sophisticated cooling. They can also draw much more power, since they don’t share the CPU’s socket, which allows for even more speed.

Now for the terms iGPU and dGPU. iGPU stands for Integrated GPU, which refers to the GPU included with some CPUs. dGPU stands for Dedicated GPU, which is effectively another term for the GPU on a Graphics Card. The term dGPU often comes up when talking about laptops that have dedicated graphics but can’t fit a full-size Graphics Card.

Do you understand? Alright then, can a computer have no GPU at all? Not if it needs to show anything: every computer that drives a display needs some sort of GPU, otherwise nothing will appear on the screen.

Let’s talk about the names of Graphics Cards for starters. There are currently 3 main Graphics Card companies: NVIDIA, AMD, and Intel. NVIDIA has been selling graphics chips since the mid-1990s. AMD entered the market by buying ATI in 2006, and ATI itself had been making graphics hardware since the 1980s. Intel returned to Graphics Cards in 2022, 24 years after its last attempt in 1998.

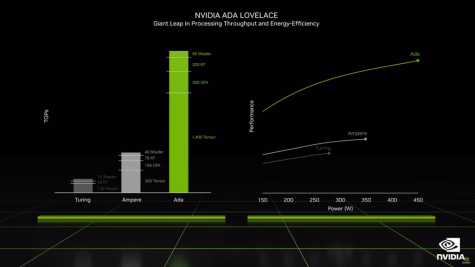

NVIDIA’s recent cards use the names RTX 2000, RTX 3000, and RTX 4000, from oldest to newest. The last two digits of the model number give the tier (#050, #060, #070, #080, or #090), where a higher number means a faster card, and a Ti at the end means a better version of that model. The RTX 2000 series uses either Ti or Super to indicate a better card. The RTX 2000 series uses the Turing architecture, the RTX 3000 series uses Ampere, and the RTX 4000 series uses Ada Lovelace.
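To make the NVIDIA pattern concrete, here is a minimal Python sketch that splits a name such as "RTX 4090 Ti" into its parts. The function name `decode_nvidia_name` and the regular expression are made up for this illustration and only cover the three series named above; this is not anything NVIDIA publishes.

```python
import re

# Architecture per series; keys are the first two digits of the model number.
ARCHITECTURES = {"20": "Turing", "30": "Ampere", "40": "Ada Lovelace"}

# Hypothetical pattern for names like "RTX 4090 Ti" or "RTX 2070 Super".
NAME_PATTERN = re.compile(r"^RTX\s?(\d{2})(\d{2})(?:\s(Ti|Super))?$", re.IGNORECASE)


def decode_nvidia_name(name):
    """Return the series, architecture, tier, and suffix, or None if the name does not fit."""
    match = NAME_PATTERN.match(name.strip())
    if match is None:
        return None
    generation, tier, suffix = match.groups()
    return {
        "generation": generation + "00",  # "40" becomes "4000"
        "architecture": ARCHITECTURES.get(generation, "unknown"),
        "tier": tier,  # "50" through "90"; higher means faster
        "suffix": suffix.title() if suffix else None,  # "Ti", "Super", or None
    }


print(decode_nvidia_name("RTX 4090 Ti"))
```

Names outside the pattern, such as an older "GTX 1080", simply return `None` rather than a guess.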

Currently, AMD names its Graphics Cards RX 5000, RX 6000, and RX 7000, from oldest to newest. An XT or XTX at the end indicates a better card. The pattern is similar to NVIDIA’s, but the tier is a #500-style number instead of #050, and AMD can add 50 on top to indicate a better card still (for example, the RX 6650 XT sits above the RX 6600 XT). A more complicated way of naming Graphics Cards, I know.
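The AMD pattern can be sketched the same way. The short Python example below is an assumption-laden illustration, not an official decoder: the name `decode_amd_name` and the regular expression are invented here, and they only handle four-digit RX names with an optional XT or XTX.

```python
import re

# Hypothetical pattern for names like "RX 6600", "RX 6650 XT", or "RX 7900 XTX".
# Digit 1 is the generation, digit 2 is the tier, and digit 3 is 0 or 5 (the "+50" bump).
AMD_PATTERN = re.compile(r"^RX\s?(\d)(\d)(0|5)0(?:\s(XTX|XT))?$", re.IGNORECASE)


def decode_amd_name(name):
    """Return the series, tier, bump flag, and suffix, or None if the name does not fit."""
    match = AMD_PATTERN.match(name.strip())
    if match is None:
        return None
    generation, tier, bump, suffix = match.groups()
    return {
        "generation": generation + "000",  # "6" becomes "6000"
        "tier": tier + "00",  # "6" becomes "600"; higher means faster
        "refreshed": bump == "5",  # True when 50 was added, as in "6650"
        "suffix": suffix.upper() if suffix else None,  # "XT", "XTX", or None
    }


print(decode_amd_name("RX 6650 XT"))
```

Reading the digits one at a time is what makes the scheme feel more complicated than NVIDIA’s: the same four-digit number carries the generation, the tier, and the small bump.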

Intel names its new Graphics Cards Intel Arc A300, A500, and A700. The last two digits indicate how good the card is within its series; the values vary by series, but a higher number means better (the A770 is above the A750, for example).

All of these companies make dedicated GPUs scaled down for laptops. AMD and Intel put an M after the model number to indicate mobile, while NVIDIA doesn’t put an M on the box and instead lists the part as “Laptop GPU” in the system specifications.

One more test! What do RX and RTX mean on AMD and NVIDIA Graphics Cards? NVIDIA’s RTX name advertises that its GPUs are capable of ray tracing in games. AMD’s RX is a brand prefix that was already in use before its cards supported ray tracing, which arrived with the RX 6000 series. Ray tracing itself is a whole complicated topic.
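As a rough illustration of the laptop naming rule, the Python sketch below checks a name for either marker. The function `is_laptop_gpu` is hypothetical and only knows the two conventions described above: a trailing M on the model number, or the words "Laptop GPU" at the end.

```python
def is_laptop_gpu(name):
    """Guess whether a GPU name refers to a laptop part, based on naming alone."""
    cleaned = name.strip()
    if not cleaned:
        return False
    # NVIDIA style: the name ends with the words "Laptop GPU".
    if cleaned.lower().endswith("laptop gpu"):
        return True
    # AMD and Intel style: the model number ends with an M right after a digit.
    model = cleaned.split()[-1]
    return len(model) > 1 and model[-1].upper() == "M" and model[-2].isdigit()


for example in ["RX 6800M", "Arc A370M", "GeForce RTX 4070 Laptop GPU", "RTX 4070"]:
    print(example, is_laptop_gpu(example))
```

This only reads the name, so it can be fooled by unusual branding; a computer’s own system specifications remain the reliable source.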

Christian Galvez is a Sophomore in the 2025 class, and this is his first year on staff. He tries to get readers to understand computers better but does...

gordon • Mar 28, 2023 at 7:33 am

This article is very informative! These computer terms used to sound like a foreign language but now they don’t.